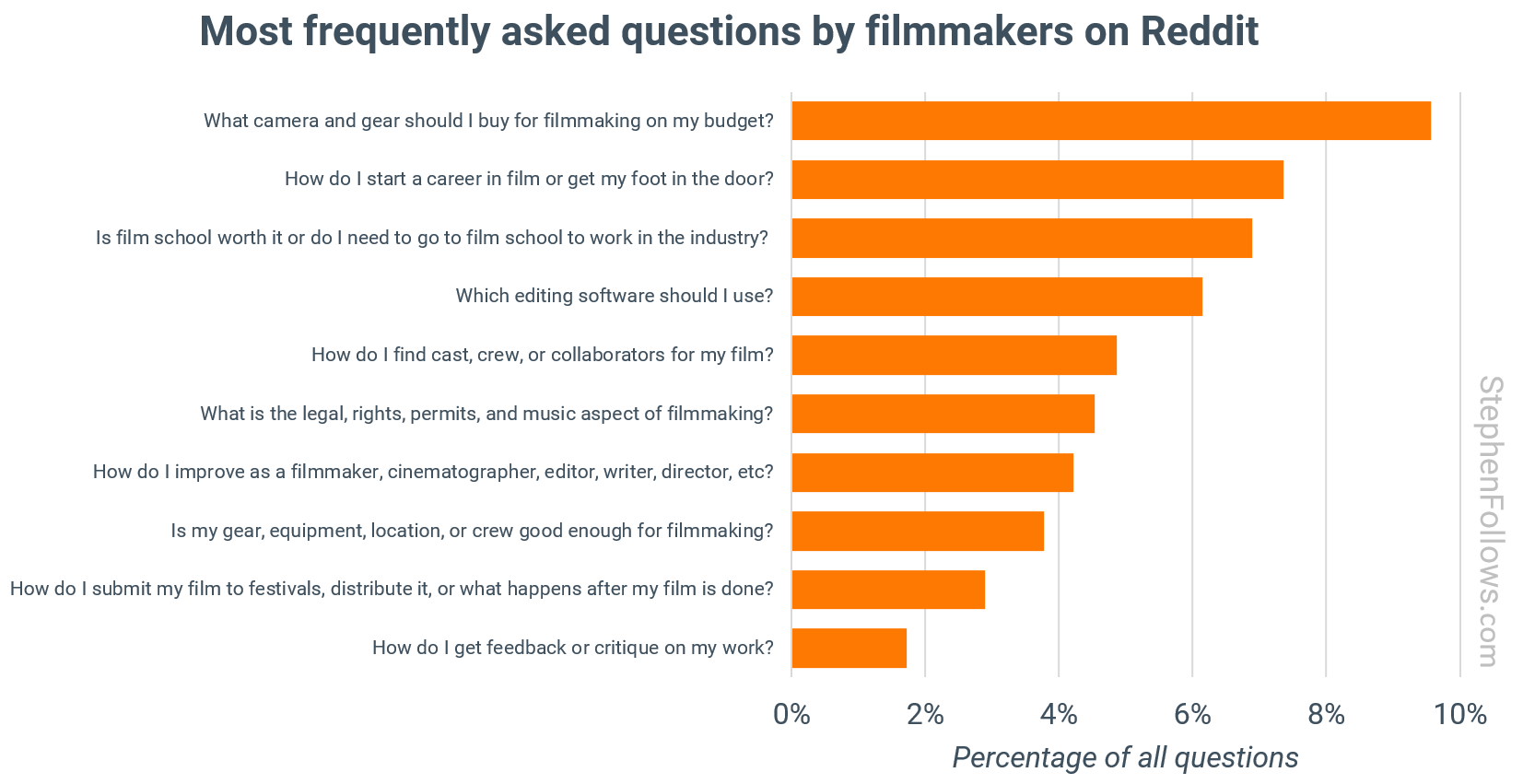

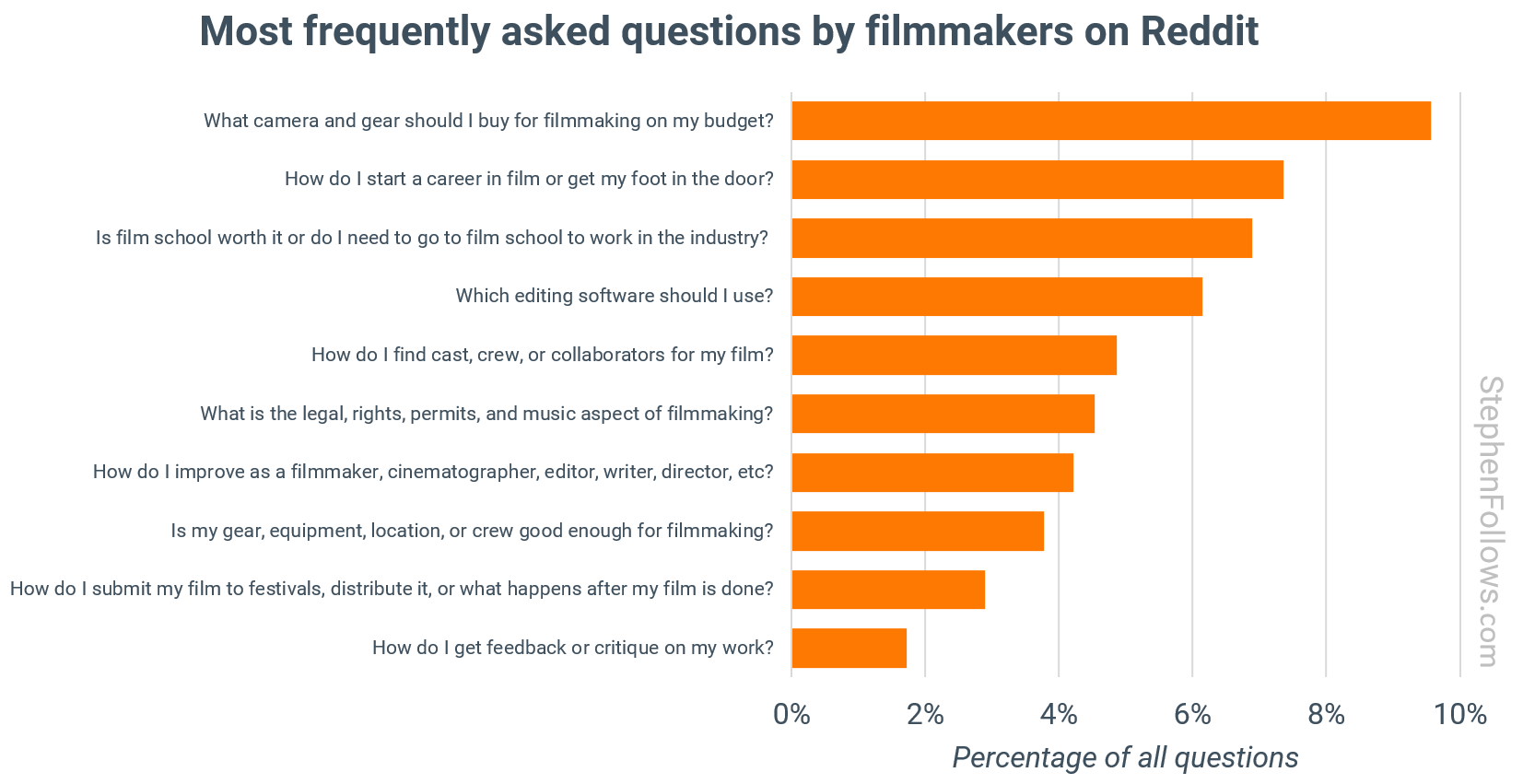

Stephen Follows analyzed over 160,000 questions on Reddit to uncover what filmmakers really ask, need and struggle with.

Amazingly, 10 questions accounted for 52% of the total. They are, quoting Stephen:

“1. What camera and gear should I buy for filmmaking on my budget?

The search for the “right” camera and kit never ends, no matter how much technology shifts. People want to know what will give them industry-standard results without breaking the bank. The conversation includes price brackets, compatibility, and whether brand or model really matters to a film’s success.

2. How do I start a career in film or get my foot in the door?

This is the practical follow-up to the film school debate. Filmmakers want straight answers about first jobs, entry points, and which cities or skills lead to real work. Many people are looking for pathways that do not depend on family connections or luck.

3. Is film school worth it or do I need to go to film school to work in the industry?

Filmmakers want clarity on the value of a formal degree versus real-world experience. They are trying to weigh debt against opportunity and want to know if there are shortcuts, hidden costs, or alternative routes into the business.

4. Which editing software should I use?

Software choice raises both budget and workflow issues. Filmmakers want to know which tools are worth learning for professional growth. Questions focus on cost, features, compatibility, and what is expected in professional settings.

5. How do I find cast, crew, or collaborators for my film?

Building a team is a constant sticking point. Most low-budget filmmakers do not have a professional network and are looking for reputable ways to meet actors, crew, or creative partners. Trust and reliability are major concerns, as is the need for effective group communication.

6. What is the legal, rights, permits, and music aspect of filmmaking?

Legal uncertainty is widespread. Filmmakers are confused about permissions, copyright, insurance, and protecting their work and collaborators. They want step-by-step advice that demystifies the paperwork.

7. How do I improve as a filmmaker, cinematographer, editor, writer, director, etc?

Self-development is a constant thread. Filmmakers search for the best courses, books, tutorials, and case studies. Clear recommendations are valued and people want to know what separates average work from great films.

8. Is my gear, equipment, location, or crew good enough for filmmaking?

Questions about minimum standards reflect deeper anxieties about competing in a crowded field. People want reassurance that their toolkit will not hold them back and want to know how far they can push limited resources.

9. How do I submit my film to festivals, distribute it, or what happens after my film is done?

People want clear instructions on taking their finished work to the next level. Festival strategies, navigating submissions, and understanding distribution channels are a minefield. Filmmakers want to know how to maximise exposure and what steps make the biggest difference.

10. How do I get feedback or critique on my work?

Constructive criticism is in high demand. Filmmakers want practical advice on scripts, edits, and showreels. They look for honest reactions to their work and advice on how to keep improving.”

My take: here are my answers, in the same order as the questions:

- The camera on your smartphone is totally adequate to film your first short movie.

- Make your own on-ramp by creating a brand somewhere online with a minimum viable product – you need to specialize and dominate that niche. Or move to a large production centre.

- Maybe, if you can afford it and you’re a people person. Otherwise, spend the money on your own films because every short film is an education unto itself.

- DaVinci Resolve. Free or Studio.

- Your local film cooperative. Don’t have one? Start your own.

- Google is your friend. For your first short festival films, don’t sweat it too much (and create your own music). As soon as your product becomes commercial, you need an entertainment lawyer on your team.

- Watch movies, watch tutorials, make weekend movies to practice techniques, challenge yourself. Just do it.

- See Answers One and Seven. Note: this is an audiovisual medium; audiences will forgive visuals that fall short but WILL NOT forgive bad sound. Luckily, great sound is easily achievable today.

- FilmFreeway.com

- Send me a link to your screener; I’ll watch anything and give you free notes on at least three things to improve.