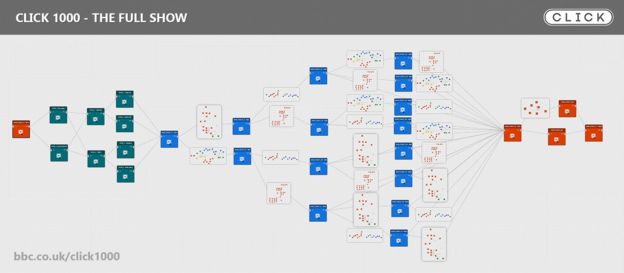

Jacob Fox on PCGamesN suggests that new tech from Intel Labs could revolutionise VR gaming.

He explains:

“A new technique called Free View Synthesis. It allows you to take some source images from an environment (from a video recorded while walking through a forest, for example), and then reconstruct and render the environment depicted in these images in full ‘photorealistic’ 3D. You can then have a ‘target view’ (i.e. a virtual camera, or perspective like that of the player in a video game) travel through this environment freely, yielding new photorealistic views.”

David Heaney on Upload VR clarifies: “Researchers at Intel Labs have developed a system capable of digitally recreating a scene from a series of photos taken in it.”

“Unlike with previous attempts, Intel’s method produces a sharp output. Even small details in the scene are legible, and there’s very little of the blur normally seen when too much of the output is crudely ‘hallucinated’ by a neural network.”

Read the full paper.

My take: this is fascinating! It could become the visual equivalent of 3D audio.